[PKM 0005] 22W50 Graph Snapshot, Obsidian Illustrating Depth vs Breadth

I started writing this last week and ended up writing too much. After sitting on it for a week, I decided to just post something.

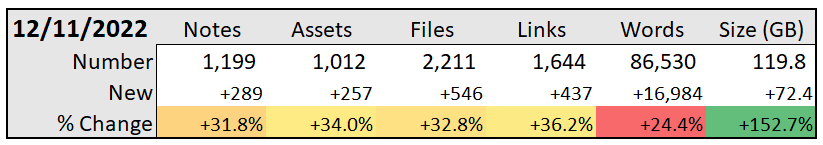

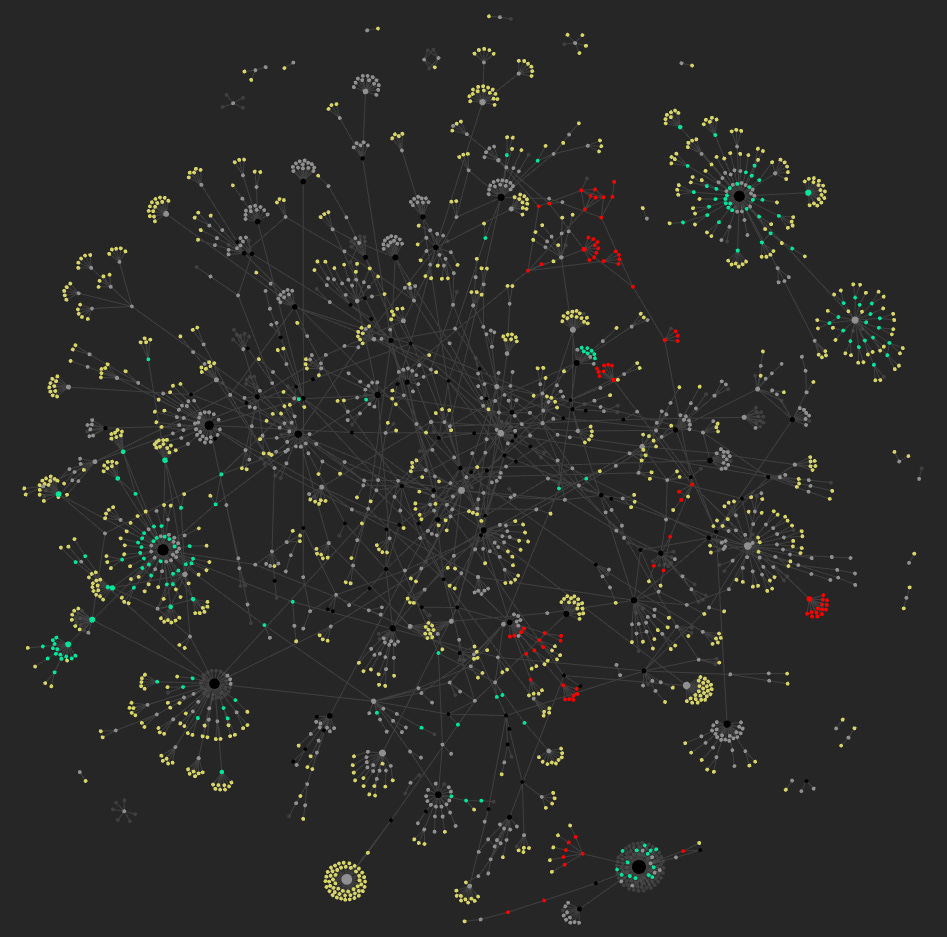

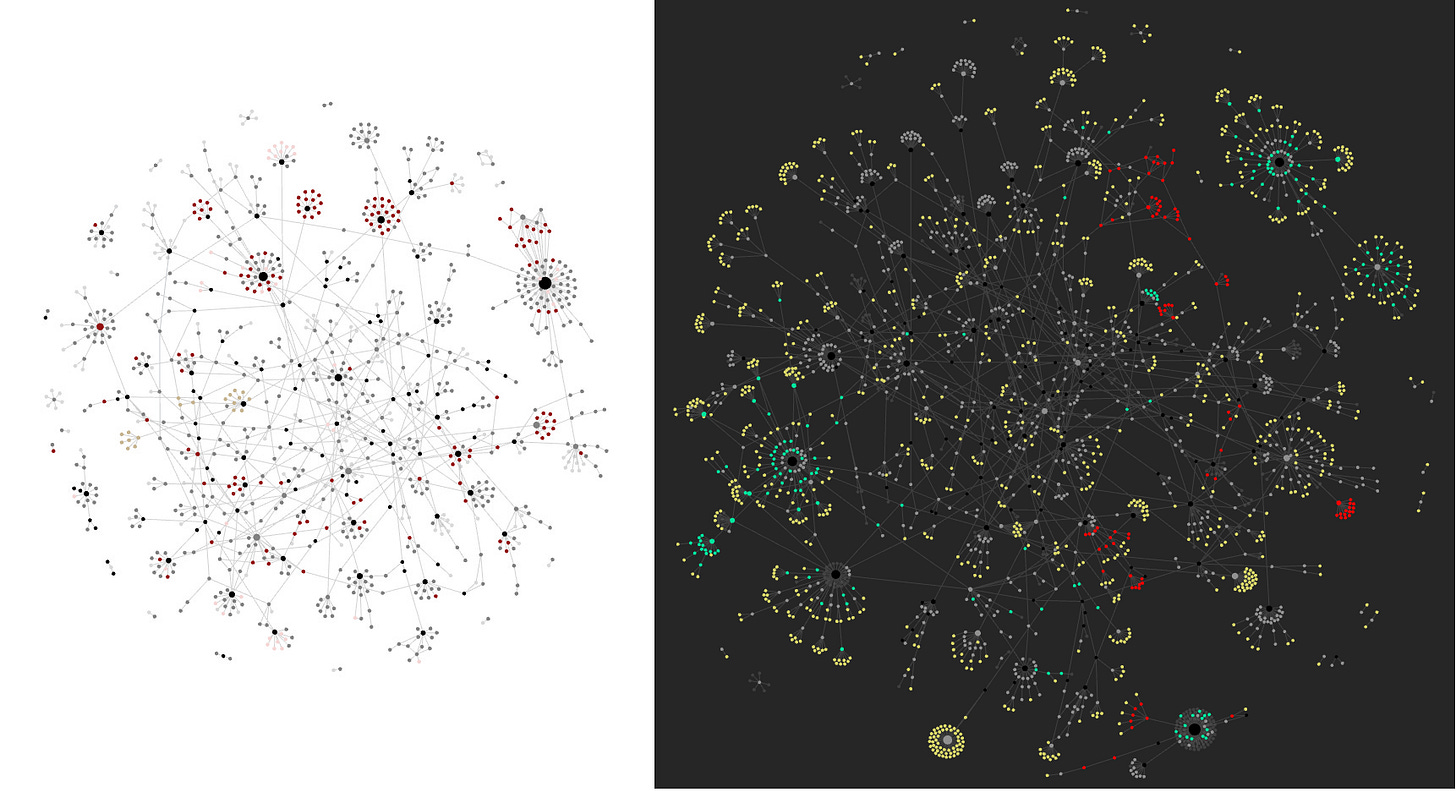

A picture is worth a thousand words, and that can mean many things. To some people it's simply a hit of dopamine. To an AI trained on pictures like these, it can mean everything (see the header image). Here is a picture of my graph with attachments enabled. Something is quite different from previous weeks, and I find it visually pleasing. The notes have taken shape on their own: instead of the clustered mess of previous weeks, there's a blossoming outward, a flowering of notes.

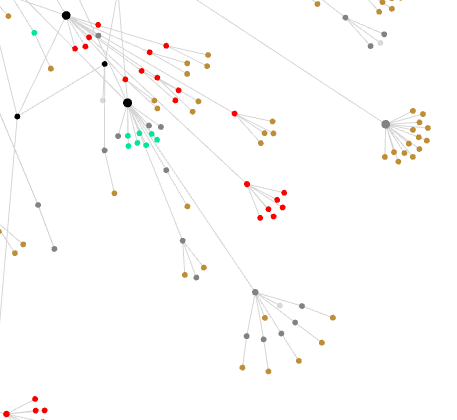

Last week I discussed how I changed my note title formatting, and it has started to give me a bit of perspective, especially now that I have been focused on one topic (AI, and more specifically Stable Diffusion) for a good week or two. I've also been importing some of my old content from TheBrain, which was heavily structured. Together, these two changes reveal an interesting contrast between depth and breadth in how they show up on the graph. In this case, red notes are old notes I've imported from TheBrain, and green notes are new notes related to Stable Diffusion and, more broadly, txt2img.

In both the red and the green notes, you can see a blossoming outward from where the notes originate. With TheBrain, the connections already exist from a previous lifetime, and the content was already formatted after it was written, so importing it creates levels of depth that would normally take a lot of processing to develop. These notes are also primarily text. Combine the two and you get a tight, controlled bloom: a nice, tight cluster.

This contrasts sharply with my Stable Diffusion notes, where the blossoming is much more intense because I am adding content quickly without much thought, and there are many more images. Images are even quicker to add, and because each one simultaneously says much more and much less than words can, it often takes many of them to illustrate a point. In some cases, they are far more useful than text because they immediately provide a frame of reference.

For example, how would you describe the Mona Lisa in writing? Simply stating "A painted portrait of a female during the Renaissance by Leonardo da Vinci" makes sense to us, but that short description allows a practically infinite number of possible images. It leaves a lot of "white space" open to interpretation, and an AI given that prompt doesn't share the frame of reference we humans have. (This reminds me of a speaking event where Elon Musk, back when he was cool, spoke of how an AI could see us as an anthill and steamroll over us. Ultron, basically.)

When we use AI to create art, we communicate with it through prompts: text we send to the txt2img diffusion model to explain what we are trying to generate. Since the AI isn't a mind reader and can't see the vision in our mind's eye, it does its best with permutations limited only by our processing power and its training. If you go back up and look at the header image, that's the difference between two differently trained versions of the same underlying model. This means that the more precise our language, the closer we can get to the result we want, but precision can also have unintended consequences. There's a sweet spot where we give the AI enough guidance to create what we're looking for, but also enough freedom to create something novel that surprises us.

All this rambling is to say that this week's nodes are starting to look like neurons reaching for the unknown as I explore more about AI. I think we're on the cusp of a revolution as we move from a consumption (or information) age into a creation age. If you've been following the drama, you've seen many terrified artists who spent decades honing their craft, only for an AI to generate hundreds of works in their style in the time it takes them to produce one piece.

Instead of truck drivers losing their jobs to self-driving cars, as we all expected, we're seeing creatives, whom many thought were untouchable, have a chunk of flesh taken out. On the flip side, the productivity gains are insane, and you could even call this the democratization of artistic talent: now anyone with an imagination can bring their dreams into reality... in some small form. What matters now is not how dexterous you are, but how imaginative and fantastical your ideas are. To use an analogy: soon anyone will be able to create a Rings of Power without a billion-dollar budget, but only a select few will be able to create a House of the Dragon, even with a billion-dollar budget. That's why I'm so excited. Next week's post (I'm posting this from the future) will show that.